The evolution of Cloud storage was the second important trend Steve Ahern observed at IBC24.

Cloud storage has been around for many years, but there is a growing realisation that the cloud is not the harmless soft and fluffy thing that people first thought. The cloud is a series of huge data centres all around the world, connected via the internet through wired networks, cell phone data and satellite delivery.

Because of its scale, ‘the cloud’ is efficient in many ways, but it is also a massive consumer of power and water, and a major emitter of carbon dioxide.

In our broadcast and production industries we use the cloud for editing, storage, filing content to our studios and for operating our SaaS (software as a service) production tools and playout systems. We also use it for transmission, which we call streaming when it is delivered via the internet. Cloud is now embedded in the way we work, so it is timely that cloud suppliers are thinking about future efficiency and sustainability of cloud services.

Live-to-air broadcast transmission has always been delivered in near real time, with the major drawbacks being geographical interference, such as hills, and the limits of the coverage area. The broadcast chain goes from studio to transmitter to receiver over the air.

For streaming there are many more steps in the chain between the broadcaster and the receiver. The studio signal goes via a router to the cloud server and is encoded in various formats for delivery. When requested by the user, the signal goes via the internet, satellite or cell towers to the user’s device. User devices use different standards to decode and deliver the content to a large or small screen, speakers and earphones. During those steps many things can get in the way of efficient delivery and cause buffering or delay the signal. That delay is referred to as latency.
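As a rough illustration of why more steps mean more latency, the sketch below treats end-to-end delay as the sum of the delay added at each step in the chain. The stage names loosely follow the steps described above, and every number is a hypothetical placeholder chosen to make the arithmetic visible, not a measurement from any supplier.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    delay_ms: float  # placeholder value, not a measurement


def total_latency_ms(stages):
    """End-to-end latency is the sum of the delay added at each step."""
    return sum(stage.delay_ms for stage in stages)


def slowest_stage(stages):
    """Return the step that contributes the most delay."""
    return max(stages, key=lambda stage: stage.delay_ms)


# Hypothetical figures, for illustration only.
chain = [
    Stage("studio to cloud server", 200),
    Stage("encoding", 1500),
    Stage("internet delivery", 300),
    Stage("device buffering and decode", 4000),
]

print(total_latency_ms(chain))    # 6000
print(slowest_stage(chain).name)  # device buffering and decode
```

Listing the steps this way makes it easier to see which step is worth attacking first when trying to bring a stream closer to real time.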

Low Latency

One of the biggest issues confronting cloud usage is achieving low latency. Buffering, too much delay in beginning a stream or a song, and non-real-time coverage of live events are the things that annoy audiences most with cloud streaming delivery.

The holy grail for broadcasters is to find the quickest, cheapest, most interruption-free method of getting programming to audiences via streams. How that content is stored in the cloud and then delivered to audience devices is where the complexity lies. How streams scale between small and large audience numbers is also a factor.

At IBC, I spoke to StreamGuys, a California-based company that provides live and on-demand streaming, podcast delivery, and software-as-a-service (SaaS) toolsets for enterprise-level broadcast media organizations. The company brings together the industry’s best price-to-performance ratio, a robust and reliable network, and an infinitely scalable cloud-based platform for clients of any size to process, deliver, monetize and play out professional streaming content.

Eduardo Martinez showed me the company’s latest advances on delivering low latency audio and video content that adapts bandwidth to the pipes and devices used by each consumer to deliver streamed content in close to real time.

“StreamGuys is a content delivery network and streaming media company. We’re focused on multiple assets of the entire broadcast ecosystem. Here at IBC 2024, we’re showcasing our ultra-low latency video solutions, also applicable to radio,” said Martinez.

With so many studios now visualising their content via video streams, as well as just streaming and broadcasting the audio, studio cameras now also have to be thought about by engineers designing studios. In the recent ABC Radio Sydney studio build for Parramatta we faced this issue in each of our radio broadcast studios, so it was top of mind for me in the conversation with Martinez.

“Capturing that banter between radio hosts and the guests in the studio is now a different facet of the radio experience. Bringing that to the end consumers is something that we recommend stations do a lot more because it [brings together] the audio and visual human elements,” he told me.

“If you can send us an RTMP, a video feed, or SRT, we can take that and transmux it server-side, then deploy a WebSocket-based protocol to keep that latency down to around one second. It’s really just a matter of connecting any encoder that you might have on-premise, sending us a video feed, and receiving a link with a player that has that ultra-low latency embedded. It’s very stress-free for the broadcaster.”

Cloud Storage

All of the big tech players provide cloud storage facilities. One of the most prominent at IBC24 was Amazon Web Services (AWS).

AWS has been offering cloud services for broadcasters for some years now and has earned a reputation for working with broadcasters to develop tools that are suited to them. Many broadcasters have told me that Amazon has real people to talk to and is responsive to ideas for development and delivery of broadcast services that meet broadcasters’ needs, unlike some other cloud providers who are far less responsive. This approach was evident at IBC, where AWS had a massive stand and plenty of people to talk to about the services offered.

Since launching in 2006, Amazon Web Services has been providing cloud computing technologies that help organizations “build solutions to transform industries, communities, and lives for the better.” Cloud computing is the on-demand delivery of IT resources over the Internet with pay-as-you-go pricing. Instead of buying, owning, and maintaining physical data centres and servers, companies can access technology services, such as computing power, storage, and databases, on an as-needed basis.

Broadcasters use Amazon’s cloud for content storage, online collaborative production, and content delivery. AWS works with other technology providers at the ingest and delivery stages of the broadcast process.

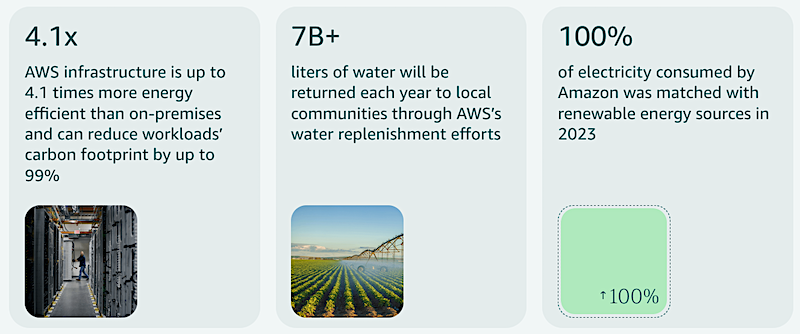

Amazon is trying to become more sustainable in its data centres, and is trying to be more transparent about its energy usage and sustainability goals.

Cloud Security

Another priority at IBC this year was the security of broadcasters’ cloud services. Hacking, scams, data theft and unauthorised AI training have all become higher priorities for the technology suppliers I talked to, because broadcasters are demanding more security for their content. Penalties for data breaches and more regulations about protecting customer privacy have made broadcasters, rightly, more cautious and they expect their cloud suppliers to deliver constantly updated security.

RCS, one of the first radio companies to use the cloud for service continuity, SaaS operations and adaptive split services, was showing the latest versions of its Selector and Zetta products, which have high levels of security to protect client data and ensure fast recovery of services from the cloud if needed.

“We have proven that live radio from the cloud is possible,” RCS’s Sven Andrea told me.

Olympics

With the Olympics just ended, one of the most interesting presentations was hosted by Rizwan Hanid, Cisco’s Head of Sports & Entertainment, who brought together key technology players who were responsible for coverage of the Paris Olympics.

This year the Olympic opening ceremony was held not in a stadium but outside, along the river Seine, presenting many logistical challenges for broadcast coverage. Using cloud technology at the heart of a complex web of outside broadcast tools, the Olympic Broadcasting Services (OBS) team was able to use a range of tools to cover the massive sporting event.

When Olympic rights holders started using data centres in Beijing 2018, “some broadcasters were very sceptical” about whether cloud could be used for HD, according to OBS Chief Executive Yiannis Exarchos. In Paris, the source format was UHD HDR with immersive 5.1.4 sound, and the majority of transmission was hosted in the cloud. Each country’s boat was equipped with a Samsung Galaxy S24 Ultra smartphone to share onboard footage via an exclusive 5G network powered by Orange.

For Paris 2024, OBS produced over 11,000 hours of content, more than any previous Olympic Games and a 15.8% increase on the Tokyo 2020 games. There was more athlete-centric coverage and behind-the-scenes material. Cinematic lenses with a shallow depth of field were used to enhance the overall visual experience for the viewer. New dynamic, data-driven graphics were used to display athletes’ performances in minute detail, while a multitude of camera angles fully immersed fans.

Green Cloud

There are many factors surrounding cost and ecological sustainability when judging whether to use cloud services. If your audience is consistent and located in a specific geographic area, broadcast transmission may be the most efficient and scalable way to deliver your content from a cost and environmental sustainability viewpoint. If your audience is large and worldwide and changes between very small and very large numbers, then cloud storage, scalability and delivery may be more efficient.

We have known the costs for maintaining and powering our transmitters since broadcasting began… we pay the electricity and maintenance bills directly. But it is difficult to compare cloud transmission with broadcast transmission because many of the costs are hidden or distributed across multiple steps in the streaming chain. Free to air broadcast transmission costs are fixed no matter how small or large your audience is, but the more successful you are with streaming, the more your costs will increase.
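The fixed-versus-variable point above can be put into a small worked example. This is a minimal sketch, assuming streaming cost grows in a straight line with audience size and broadcast cost stays flat. The function names and both prices are hypothetical and are not taken from any supplier’s price list.

```python
def broadcast_cost(fixed_cost_per_hour, audience):
    """Transmitter cost per hour does not depend on audience size."""
    return fixed_cost_per_hour


def streaming_cost(cost_per_listener_hour, audience):
    """Streaming cost per hour grows with every extra listener."""
    return cost_per_listener_hour * audience


def break_even_audience(fixed_cost_per_hour, cost_per_listener_hour):
    """Audience size above which streaming costs more than broadcast."""
    return fixed_cost_per_hour / cost_per_listener_hour


# Hypothetical prices, for illustration only.
fixed = 50.0         # transmitter cost per hour
per_listener = 0.01  # streaming cost per listener-hour

print(break_even_audience(fixed, per_listener))  # 5000.0
```

With these placeholder prices, streaming is cheaper below 5,000 simultaneous listeners and broadcast is cheaper above that. The real difficulty, as noted above, is finding trustworthy values to put in for the cloud side.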

With the introduction of AI, emissions, costs and demand for processing power have all increased sharply.

I asked questions and did some research, but the comparative costs and sustainability of cloud delivery are very hard to pin down, which makes it difficult to compare cloud with broadcast. When evaluating whether to use broadcast transmission or cloud, you have to take many factors into account. Here are some of them:

- Cost

- Environmental impact

- Scalability

- Accessibility

- Collaboration

- One way or two way interactivity

- Audience measurement and consumption data

- Backups and disaster recovery

- Ability to use AI

- Security and privacy

- Performance

- Latency

- Control of your own transmission system

- Equipment maintenance costs and issues

- Owning your audience

- Regulatory compliance, promise of performance

- Keeping on-premise software updated, compared with SaaS software updates from cloud

After discussing green cloud pros and cons with exhibitors I used their advice to calculate some comparative costs. These are indicative only and of course will continually change as we pursue more sustainable content delivery, but here is my snapshot of comparative costs, emissions and water consumption based on figures from major cloud suppliers (listed at the bottom of this article).

Comparison Summary (per hour, based on 10,000 viewers/listeners)

- AWS Live Streaming: 720 kg CO2 emissions, 2,700 Litres of water

- Google Cloud Live Stream: 700 kg CO2 emissions, 2,600 Litres of water

- IBM Cloud Video: 710 kg CO2 emissions, 2,650 Litres of water

- Satellite TV Broadcasting: 600 kg CO2 emissions, space debris impact

- Terrestrial TV Broadcasting: 480 kg CO2 emissions, minimal water, electromagnetic radiation, land usage

- Radio Broadcasting (FM): 160 kg CO2 emissions, minimal water, electromagnetic radiation, land usage. AM costs and emissions are higher; digital radio costs are lower when amortised across multiple channels in each multiplex
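To make the summary easier to compare, the hourly totals can be divided by the 10,000-person audience they are based on. The sketch below does only that arithmetic, using the figures listed above. The dictionary layout and function name are illustrative. `None` marks entries where the summary gives no water figure in litres.

```python
VIEWERS = 10_000  # audience size the summary above is based on

# (kg CO2 per hour, litres of water per hour or None if no figure is given)
summary = {
    "AWS Live Streaming": (720, 2700),
    "Google Cloud Live Stream": (700, 2600),
    "IBM Cloud Video": (710, 2650),
    "Satellite TV Broadcasting": (600, None),
    "Terrestrial TV Broadcasting": (480, None),  # "minimal water"
    "Radio Broadcasting (FM)": (160, None),      # "minimal water"
}


def per_viewer(co2_kg, water_litres, viewers=VIEWERS):
    """Convert hourly totals to grams of CO2 and millilitres of water per viewer."""
    co2_g = co2_kg * 1000 / viewers
    water_ml = None if water_litres is None else water_litres * 1000 / viewers
    return co2_g, water_ml


for name, (co2_kg, water_l) in summary.items():
    co2_g, water_ml = per_viewer(co2_kg, water_l)
    print(f"{name}: {co2_g:g} g CO2 per viewer-hour, water (ml): {water_ml}")
```

On these figures AWS live streaming works out at 72 g of CO2 and 270 ml of water per viewer-hour, against 16 g of CO2 for FM radio. The per-viewer numbers for the broadcast rows apply only at this audience size: if broadcast emissions are fixed in the same way as broadcast costs, the figure per listener falls as the audience grows.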

One of the most significant sessions that discussed the sustainability impacts of the cloud for broadcasters was Ecoflow, an energy conserving and measuring project proposed by Humans Not Robots and Accedo.tv, with support from Champions ITV and the BBC.

As Humans Not Robots CEO Kristan Bullett explained: “If you can’t measure something, you can’t improve it.

“Measurement of power and environmental impact is extremely challenging. We are building on the activities of organisations doing live streaming and putting a framework in place that allows cross-organisation understanding and ensuring we’re using the right tools and the right measurement techniques so that we have confidence in those metrics. And, more importantly, not just being an engineering problem, but enabling the executive teams to articulate the environmental impact to the industry. And, of course, very importantly, allowing end consumers to understand what that means as well.”

The Ecoflow IBC Accelerator Challenge measured the energy performance of key elements of the content supply chain such as CDN, encoding, transcoding and advertising delivery to create a base measurement. With that base as a starting point, Champions tested proposed power-saving features to determine the impact within the broader supply chain. It determined and demonstrated opportunities to make processing, streaming and media consumption more measurable and sustainable.


Related article: IBC Trends 1: Artificial Intelligence

Disclosure: Steve Ahern is the Chairman of the Green Ears initiative, a sustainability project uniting broadcasters, podcasters, suppliers and audio producers in the Australian audio industry who want to improve their sustainability.

Reference sources

- https://cloud.google.com/livestream/pricing

- https://www.oracle.com/au/cloud/streaming/pricing

- https://docs.aws.amazon.com/solutions/latest/live-streaming-on-aws/cost.html

- https://www.dacast.com/blog/cloud-video-streaming

- https://azure.microsoft.com/en-us/pricing/details/media-services

- https://www.cloudzero.com/blog/netflix-aws

- https://cloud.google.com/pricing

- https://azure.microsoft.com/en-us/pricing/details/cloud-services